Why Digital Health is Harder Than You Might Think: A Reality Check From the Clinical Trenches

Brennan Spiegel, MD, MSHS

Director of Health Services Research, Cedars-Sinai Health System

Digital Health in the Clinical Trenches: A Reality Check

We often hear that digital health is transforming medicine at a breathtaking pace. Each day brings news of an innovation poised to alter medicine in ways we could never have conceived just years ago. Technophiles announce that digital health advances such as electronic health records (EHR), mobile health (mHealth) applications, and wearable biosensors permit inexpensive and seamless data collection and processing, allowing previously unimaginable delivery of meaningful data from patients to healthcare providers, administrators and analysts. Put simply: We’re told that modern technologies are dramatically transforming healthcare for the better (example).

We need a reality check. This grand vision is not yet clear for many of us testing the science of digital health and seeking to understand how it will improve care. Digital health is in its infancy. For those who might disagree with this assessment, I would pose a simple litmus question:

Have you ever placed a digital device on an actual patient?

I ask this question because our research team at Cedars-Sinai spends a lot of time placing digital devices on patients. We’ve seen what happens next, and it’s not always what we expect. In fact, we are constantly surprised and perplexed by what we learn. I’d like to share some of those examples. These vignettes illustrate what we don’t know, and also what we don’t know we don’t know yet. None of this means we shouldn’t pursue digital health with vigor, because we should. But it does mean we need to study, test, observe, measure and update our assumptions with real-life experiences in actual patients. Digital health is hard. Here’s why:

Four “Real-Life” Examples of Why Digital Health is Really Hard

#1: Missteps with step counts

Our research team has been conducting a study to measure activity metrics in patients with rheumatoid arthritis undergoing medical treatment. The idea is to evaluate the correlation between activity metrics – like step counts, maximum and minimum velocity and distance travelled – and patient-reported outcomes (PROs) – like fatigue, pain and joint stiffness. If patients’ self-reported health improves, then so should their step counts and mobility, right?

So, we obtained high-tech sensors from our colleagues at the UCLA Wireless Health Institute and put them on the limbs of patients undergoing treatment. Simple. Just wear them, keep them charged up and report the PROs daily; then we’ll see what we get.

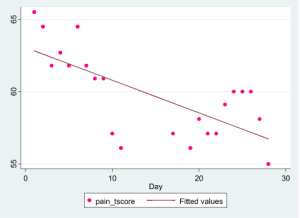

Our very first patient threw us for a loop. She is a 72-year-old caregiver to her disabled husband. She refuses to let her disease stop her from doing what she has to do, but regrets how it keeps her from her true passion: writing. She finally found relief during the treatment course in our study, and, sure enough, her pain, fatigue, and joint stiffness all improved dramatically over the course of 30 days. Take a look at her actual pain data, right here:

It’s easy to see that her pain improved. I’m not showing her other data, but her fatigue, joint stiffness, physical function, and other key outcomes also improved. No doubt about it: she felt better.

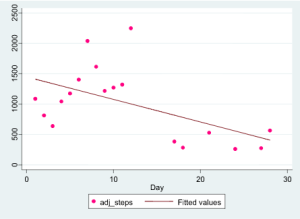

So, then what do you make of her step count data, measured during the exact same period and shown below?

You’re reading that right: she nearly stopped walking. She apparently put the brakes on basic ambulation at just the moment she felt gloriously better.

What gives? Our immediate reaction was that the data were wrong. It made no sense. She must not have used the sensors correctly. Or she must have taken them off for long periods during the day. Or maybe she put them on someone else. Or, perhaps, she really wasn’t feeling better, and we should trust the step counts but not the PRO results. There had to be some explanation.

What happened? Why did she report steady symptom improvement yet move less and less? We figured out the answer only after speaking with the patient: the symptom improvement allowed her to return to her true work, writing. Stiffness and pain in her knees and ankles had previously prevented her from sitting at her computer for long periods of time, a prerequisite for the type of work she was passionate about. Now, sitting at her desk for many hours and registering little movement beyond typing with her fingers was an “activity” she could return to with gusto. Diminished pain and stiffness in her knees and ankles allowed her to be less physically active, and achieve a greater quality of life in the process. Here is a telling quote from her interview:

Now when I sit down to work, I’ll work like 11 hours straight. You know, I’ll take bathroom breaks and go to eat, but then I go back to it, because when you’re hot, you’re hot, you know, you do it, and it was always sort of heartbreaking to have to stop.

Only by contextualizing her PRO and sensor data within our face-to-face interview did the data streams give a clear picture of her progress and its effects on her quality of life.

Had we been monitoring this patient from a remote e-coordination hub, keeping an eye on her steps as a surrogate measure of illness severity, we’d have been duped. Totally duped. Instead, her inactivity was evidence of her improvement. Our assumptions were violated – with the very first patient in our study.

Conclusion: Our most fundamental assumptions about digital health data can be laid to waste with a single patient. Who would have thought that walking and moving less could be a good thing for a patient suffering from debilitating arthritis? In this case it was.
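To make the trap concrete, here is a minimal sketch of how a naive remote-monitoring rule could misread a patient like this one. The numbers are made up for illustration and are not the patient's data; the 0-10 pain scale, the `pearson` helper, and the "steps fell by half" alert rule are all assumptions of mine, not part of our study protocol.

```python
from math import sqrt


def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two equal-length sequences with at least 2 points")
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined for a constant series")
    return cov / sqrt(var_x * var_y)


# Illustrative values only (hypothetical), one entry per day.
pain_scores = [8, 7, 7, 6, 5, 4, 3, 3, 2, 2]  # assumed 0-10 scale, lower is better
daily_steps = [5200, 5000, 4600, 4100, 3500, 2800, 2200, 1900, 1500, 1200]

r = pearson(pain_scores, daily_steps)

# The going-in assumption was "less pain means more steps", i.e. r < 0.
# Here pain and steps fall together, so r comes out positive instead.
assumption_violated = r > 0

# Hypothetical alert rule: flag the patient if steps drop below half of baseline.
naive_alert = daily_steps[-1] < 0.5 * daily_steps[0]

print(f"r = {r:.2f}, assumption violated: {assumption_violated}, alert: {naive_alert}")
```

With data shaped like this, the rule raises an alert on a patient who is actually improving. Neither the correlation nor the alert can tell you why the steps fell; only the interview could.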

#2: “I’m on house arrest”

Not long after we recruited our first patient in the arthritis study, we began to find more unexpected results from others wearing the sensors. In particular, the sensor, which holds a small gyroscope in housing that’s the size of a small matchbox, caused most of our patients to cringe. One said: “I feel like I'm on house arrest.” He explained that having the device around his ankle felt like being on parole. This was not an isolated belief. In fact, four out of the first six patients in our trial agreed that there was a psychological burden to wearing the sensor on their ankles, and some took it off because they felt stigmatized and judged. Some offered preemptive explanations, anticipating that others would make assumptions and draw negative conclusions about them.

One patient made the obvious point that she could not wear the sensor over her affected joints, no matter how small the sensor was. Having anything near a “hot joint” was enough to make it unacceptable. No chance. Just not going to wear it there.

Conclusion: be prepared for unexpected consequences of putting devices on people. These findings may be obvious in retrospect, but they weren’t obvious going in. Again, our presuppositions about how “easy” or unobtrusive a device might be are easily violated.

#3: The “Virtual” virtual reality study

We’re conducting another study at Cedars-Sinai that involves using virtual reality (VR) goggles in hospitalized patients. The idea is this: offer patients in pain or distress the opportunity to experience a fully immersive, three-dimensional, peaceful world that can distract them from the boredom, isolation and discomfort of their hospital bed. If you’ve ever used VR goggles, then you know they can overtake your brain and transport you to compelling and striking worlds you’ve never experienced.

For this study, we bring patients to beautiful landscapes in Iceland, transport them to tranquil ocean scenes, tour them through a virtual art studio, and even place them on stage with Cirque du Soleil performers. The visualizations are pretty amazing and transformative. It’s hard to get them out of your mind once you’ve been there. There is extensive evidence that VR interventions like these can help people in distress, so we’ve been excited to offer VR to our own patients.

After months of finalizing our protocol and obtaining approvals, we finally hit the wards with goggles in hand. Our first patient didn’t really get it. “No thanks,” he said. Our second patient wasn’t interested. “No thanks,” she said. Our third patient thought it was a weird psychology experiment. “Sorry, not interested in your experiment,” he said. Our fourth patient got it, but said he was too busy. Our fifth patient had used VR before and understood the concept, but said he didn’t want to get dizzy. Our sixth patient… you get the point.

It took 10 patients on the first day before we found a taker. And even that patient wanted to first watch the Republican Presidential Debate before using the goggles. “Could you come back later, maybe tomorrow?”

It seemed like our virtual reality study had become, well, virtual.

The good news is that most people who actually use the VR goggles really like it and we’ve seen great responses. We believe VR will indeed be effective for inpatients, but it’s not for everyone (no matter how carefully, thoughtfully, or respectfully we present the offer). What sounded like an awesome idea wasn’t immediately awesome to many patients in distress, who are suffering in ways we cannot easily understand unless and until we’re in a hospital bed like theirs.

Conclusion: Some digital health use cases look great on paper, but until we try them in actual people in distress, we can’t know whether the application is feasible or acceptable.

#4: Murphy’s Law

You know Murphy’s Law: “What can go wrong, will go wrong.” I promise you this is true with digital health. It’s surprising to see what really happens when digital device and patient are united. Here are some highlights from our own hands-on experiences:

Lost devices: If a patient gets a digital device, you can bet it will be lost before long. In just the first six patients in our arthritis study we had two devices lost forever. Just gone. In another recent example we used a Fitbit device around a patient’s wrist. She reported that it fell off her wrist one day and she couldn't find it. She said this while in tears and with great remorse – this wasn’t made up. Somehow, some way, a Fitbit just fell off her wrist and disappeared into the abyss.

Can’t charge: We are using a device in one study that uses an inductive charge pad. It’s simple: place the device on the pad, look for the light to turn green, and leave it there until it’s recharged. We’ve learned that some patients are not comfortable with inductive charging. One subject called our research coordinator and said the sensors just weren’t working. Sure enough, our remote monitoring data came back empty – she wasn’t using the sensors. An extensive telephone conversation couldn't troubleshoot the problem, so we sent a team member out to her home to replace the device. It turned out that the device was working fine. The patient was placing the device near the charge pad, but not directly on top of the pad. She didn’t get it.

This may seem like an isolated or extreme example. We might even wonder how this patient could be so easily confused by something as “simple” as an inductive charge pad. But that would be misguided. People are people. And technology is technology. If we’re going to use digital technologies to monitor something as vital as, well, vital signs, then they had better be foolproof. We’re not there yet.

Ouch!: We developed a device, called AbStats, that non-invasively measures your belly. The disposable sensor sticks to the abdominal wall with Tegaderm – a common medical adhesive – and has a small microphone on the inside that monitors the sounds of digestion. A computer analyzes the results and helps doctors make decisions about whether and when to feed someone recovering from surgery (for example). We’re using AbStats in our patients to direct feeding decisions and, so far, the device is performing well. But for whom? If you’re a patient who has to pull the sensor off your belly, then it’s not performing very well at that particular moment. We quickly learned that it hurts like crazy to pull Tegaderm off the abdominal wall. Moreover, if you’re a hairy man (or even just a slightly hairy man), it gets worse. Ouch. We all know that the form factor really matters. But even when we know that, we still learn it over and over again.

Even colors matter: We developed an mHealth app called My GI Health that allows patients to measure their gastrointestinal (GI) symptoms, obtain tailored education, and communicate their information efficiently with their doctor. The system works great and has been shown, in peer-reviewed research, to even outperform doctors in obtaining a proper medical history. All good. But we learned that some users did not like the color scheme of our data visualizations. We present symptom scores over a colored gradient, where green means “no symptom” and varying shades of red mean “you have the symptom.” We had to review at least 10 different color gradient schemes before we finally settled on the one users like the most. But even then, after meticulously documenting our decision, we found that some users wanted a “yellow” area between the green and red – like a stoplight. Now we’re trying out more schemes to get it right. The point is this: even when a digital health intervention is mature, it still needs ongoing validation and refinement. Nothing rolls off the assembly line ready to go. And even when it’s working, it’s still not quite working in some cases. This stuff is hard to do.
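For readers curious what the “stoplight” request amounts to in practice, here is a minimal sketch of mapping a symptom score onto a green-yellow-red gradient. It is not the My GI Health implementation: the 0-100 score range, the specific RGB values, and the function names are all assumptions chosen for illustration.

```python
# Hypothetical anchor colors as (R, G, B); the exact shades are placeholders.
GREEN = (0, 160, 0)
YELLOW = (255, 210, 0)
RED = (200, 0, 0)


def blend(start, end, t):
    """Linearly interpolate between two RGB colors, with t in [0, 1]."""
    return tuple(round(a + (b - a) * t) for a, b in zip(start, end))


def score_to_rgb(score, low=0, high=100, use_yellow=True):
    """Map a symptom score to a color; low means no symptom, high means severe.

    The 0-100 range is an assumed scale, not one taken from the app.
    """
    clamped = min(max(score, low), high)
    t = (clamped - low) / (high - low)
    if not use_yellow:
        # Two-color scheme: green straight to red.
        return blend(GREEN, RED, t)
    # Three-color "stoplight" scheme: green to yellow, then yellow to red.
    if t <= 0.5:
        return blend(GREEN, YELLOW, t / 0.5)
    return blend(YELLOW, RED, (t - 0.5) / 0.5)


for s in (0, 25, 50, 75, 100):
    print(s, score_to_rgb(s), score_to_rgb(s, use_yellow=False))
```

The code is the easy part. Which scheme patients actually read correctly is an empirical question, and that is the part that took us so many rounds of user feedback.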

Conclusion: Expect things to go wrong when device meets human. All the time.

What does this all mean?

This essay might come across as naysaying. It is – to a degree. I’ve become more and more hesitant to blow the digital health trumpet when I see, first hand, that patients lose their devices, can’t charge them, feel stigmatized by wearing them, or just have no interest at all in using them. I also know that for every pessimistic example I’ve described here there are positive examples where digital health is shining. I get it. But we need balance.

We also need hard-fought, meticulous, systematic research to figure out whether, how, and when digital health will provide value. Consider how much evidence a pharmaceutical company must amass before getting a drug approved. Then think about how little we need for a digital device. The bar right now is pretty low for promoting adoption. It also appears there are a hundred-fold more essays pronouncing the success of digital health than there are peer-reviewed studies evaluating its true impact on living, breathing, and oftentimes suffering patients.

We should spend more time with patients. We should interview our patients about their experiences with digital health, improve our devices based on their feedback, and continuously test our technologies in everyday care settings. It’s also crucial to understand how patients interpret their own data. The case of the misleading step count is illustrative: what we presuppose is important may not be important to a patient. This is all pretty obvious stuff. So let’s do it! Next time you read a forward-reaching statement about the glory of digital health, ask yourself whether the author has ever placed a digital device on an actual patient. Digital health is a contact sport – a hands-on science. Let’s keep connecting patients to devices and studying, carefully, thoroughly, and directly, what works and doesn’t work. We’ll get this right in time.